
Crashlytics plugin-free in Swift with CocoaPods

Abandon all hope ye who enter here

For a while, that was how I felt about using CocoaPods in my Swift iOS apps.

Some would say that, even though I am quite involved in the Swift world right now (check out my latest article on raywenderlich.com on how to build an app like RunKeeper in Swift), I am not the biggest Swift advocate. That is mostly because of little things like CocoaPods, which used to work seamlessly and become a pain as soon as you switch to Swift.

Something else I really don’t like (and that’s a personality trait I know I will probably never get rid of) is intrusive, unnecessary, it-just-works GUIs. Some people are afraid of clowns; I dreaded Clippy. These days, as an iOS developer, I found that fear coming back in a form I wouldn’t have expected: my crash reporting tool!

Don’t get me wrong. I love me some crash reports! And until Xcode 7 and its new crash reports service are released, Crashlytics is definitely the best tool out there for your money, especially for small and/or open source projects. Its killer feature, let’s be honest, is that it’s free!

My only issue was, and still is, the plugin Crashlytics wants you to use in order to “Make things simple™”…

There’s a light (over at the Frankenstein place)

Let’s cut to the chase! To all of you who ran into the same issues I ran into, this is our lucky day! Here’s how to get your Swift project set up with Crashlytics using only Cocoapods and without having to use this oh-so-magical plugin.

Adding Crashlytics via CocoaPods

I am going to assume you are all familiar with CocoaPods. If your project doesn’t have a Podfile yet, just add a file named Podfile to the root of your project and fill it with these values:

pod 'Fabric'

pod 'Crashlytics'

Then grab your favorite terminal and type the following command:

$> pod install

If you haven’t installed CocoaPods yet, check out this really simple Getting Started tutorial. Once pod install has finished, open the newly created [PROJECT_NAME].xcworkspace file and you should see a new Pods project there, with these two new pods inside. Unfortunately, because Crashlytics is an Objective-C library, you will need a bridging header.

Everyone loves a Bridging Header

Again, let’s assume you know your way around mixing and matching Swift and Objective-C inside the same project. If you need more details, check out Apple’s documentation on using Swift and Objective-C in the same project.

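So, create an Objective-C header file and name it [PRODUCT_MODULE_NAME]-Bridging-Header.h. If you create it by hand, make sure your target’s Objective-C Bridging Header build setting points to it. In this file, all you have to do is import Crashlytics like this: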

#import <Crashlytics/Crashlytics.h>

You are ready to start using Crashlytics from your Swift code. However, if you build and run your project now, nothing will happen yet: you still need to hook Fabric into your build and start Crashlytics when your app launches.

Get your API Key and Build Secret

To do so, you first need your organization’s API Key and Build Secret from Fabric’s dashboard. Once you have them, add the following Run Script phase to your target’s build phases:

${PODS_ROOT}/Fabric/Fabric.framework/run [API_KEY] [BUILD_SECRET]

Once this is done, open your AppDelegate and add the following call to its application(_:didFinishLaunchingWithOptions:) method:

Crashlytics.startWithAPIKey("[YOUR_API_KEY]")

This will start Crashlytics when your app starts.

Build & Run

That’s it! Now build and run your project, and your app should show up in your Fabric dashboard right away!

Wrapping Up

I hope this little tutorial helped you integrate Crashlytics seamlessly. One of the good things is that if you want to keep using the plugin (for beta distribution, for example, which works pretty well, I’ll admit), you still can! This integration is 100% compatible with it!

If you have any questions or issues with what’s been told here, feel free to reach out to me on Twitter at @Zedenem.

Finally, I couldn’t have fixed my issues and written this article without these two resources:

- @danlew42’s article on Setting up Crashlytics without the IDE plugin on Android

- @stevenhepting’s answer to this Stack Overflow question on using Crashlytics without plugin via CocoaPods

Hope this will help,

How to handle an audio output change on iOS

Practical test

This was still reproducible as of October 2014:

- Launch the YouTube app on an iPhone

- Plug in headphones

- Launch a video that has a preroll ad (depending on ad frequency capping, it should be quite easy to find one)

- While the ad is playing, unplug your headphones

The ad pauses, and you have no way of resuming it or getting to the video you wanted to see. You might try plugging the headphones back in, but it won’t work. Now, when you do the same thing on an actual video, you get the expected behavior:

- While a video is playing, unplugging the headphones pauses it and lets you resume it

- While a video is playing, plugging headphones in doesn’t interrupt it

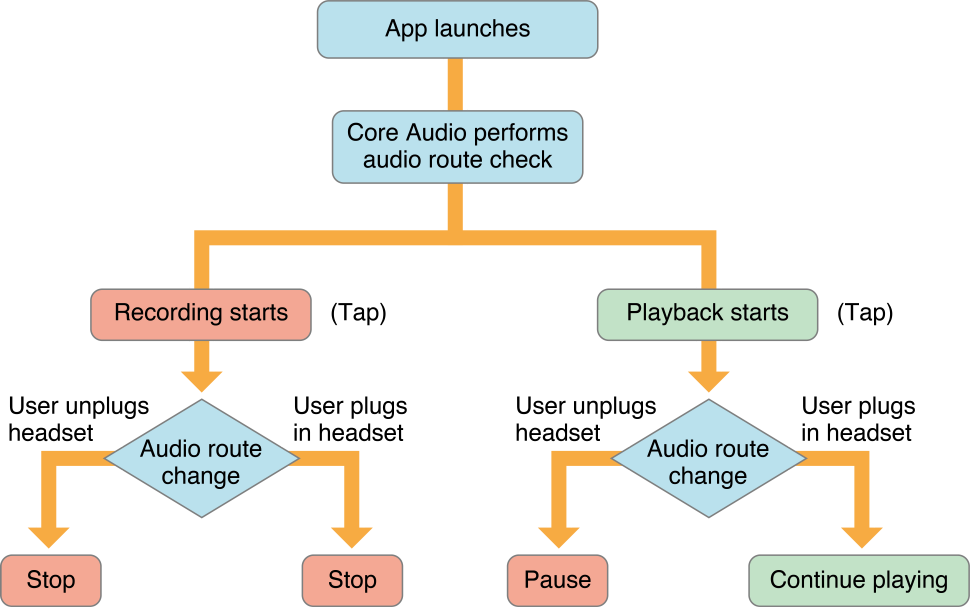

This is explained in Apple’s documentation in a pretty straightforward way:

You might think: “Well, if the player (here, the ad uses AVPlayer) paused, it should have sent a notification telling my code that its state changed. Fixing this issue is just a matter of catching that state change and acting on it.”

Well, if that were the case, I wouldn’t have had to write an article about it, would I?

The thing is, Apple’s documentation (see Audio Session Programming Guide: Responding To Route Changes) is pretty clear on the matter, but nobody seems to have written a practical example of how to use audio route change notifications to detect that headphones have been unplugged while a video is playing.

So, after digging through the web, and especially Stack Overflow, for a practical solution that did not seem to exist, here is my take on it! Hope it helps!

Audio Output Route Changed Notification

Here is what iOS gives us to listen for and act upon audio output route changes:

AVAudioSessionRouteChangeNotification

So, we just have to subscribe to it and we will know every time the audio output route changes. Add yourself as an observer:

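#import <AVFoundation/AVFoundation.h> // at the top of your file, for AVAudioSessionRouteChangeNotification

[[NSNotificationCenter defaultCenter] addObserver:self selector:@selector(audioHardwareRouteChanged:) name:AVAudioSessionRouteChangeNotification object:nil];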

Then implement the method you passed as a selector:

- (void)audioHardwareRouteChanged:(NSNotification *)notification {
    // Your tests on the audio output changes will go here
}

Using the notification’s user info to understand what happened

The AVAudioSessionRouteChangeNotification provides two important objects in its user info dictionary:

- An AVAudioSessionRouteDescription object describing the previous route, accessible via the key AVAudioSessionRouteChangePreviousRouteKey

- An NSNumber object containing an unsigned integer that identifies the reason why the route changed, accessible via the key AVAudioSessionRouteChangeReasonKey

If you just want to know when the headphones were unplugged, you should use this code:

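AVAudioSessionRouteChangeReason routeChangeReason = [notification.userInfo[AVAudioSessionRouteChangeReasonKey] unsignedIntegerValue];
if (routeChangeReason == AVAudioSessionRouteChangeReasonOldDeviceUnavailable) {
    // The old device is unavailable == headphones have been unplugged.
    // This notification may be posted on a background thread, so dispatch
    // to the main queue before updating your player or UI.
}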

Here, you don’t need to check whether the previous route was the headphones, because the only thing you want to know (and the logic behind iOS pausing the video when the headphones are unplugged) is this: when the device used for output becomes unavailable, pause.

This is great because it means your code will also work in other cases, for example if you use Bluetooth headphones and walk out of range of the device, or their batteries die. Also, it won’t pause when you plug the headphones back in, because the OS treats this as a new device becoming available and sends the reason AVAudioSessionRouteChangeReasonNewDeviceAvailable.

To go further

The AVAudioSessionRouteChangeNotification’s associated user info is full of interesting data about what happened to the audio output route, and about the different routes available and recognized by iOS. Be sure to check Apple’s documentation, especially:

- Audio Session Programming Guide: Responding to Route Changes

- AVAudioSessionRouteChangeNotification Documentation

From there, you should be able to access all the documentation you need to, for example, detect when the device is connected to a docking station, etc.

Hope this will help,

Using NSURLProtocol with Swift

Today, my first article as a member of raywenderlich.com’s Update Team went live! It’s a piece on how to play with NSURLProtocol in Swift, the new programming language Apple released this June at WWDC14. Here it is; I hope you enjoy it: Using NSURLProtocol with Swift

XML Parser for iOS is now featured on Cocoa Controls!

Very recently, during one of my previous contracts, I had to develop a tool to easily convert an XML feed into a JSON structure, and I made a little XML to JSON parser library available on GitHub: https://github.com/Zedenem/XMLParser

I decided to submit this library to Cocoa Controls and, as of today, it has been reviewed, accepted and made available here: https://www.cocoacontrols.com/controls/xmlparser

It is the second of my libraries to be featured on this very selective iOS controls site! The first one was my take on a circular UISlider (https://www.cocoacontrols.com/controls/uicircularslider), and there are more to come.

If you are wondering when to use a parser like this one, here is a summary of the context in which I found it necessary to write it:

I was working on a client’s iOS app that made heavy use of REST web services, getting and posting JSON both to display data and to interact with the server. At some point, I discovered that some of the web services didn’t conform to the global specification and sent back XML data structures instead of JSON.

No one knew about it when we first designed our app’s data model, and the problem was that I had to use the XML data the same way I used the JSON data (both when displaying it and when posting requests to the server). Given the state of the project and the time available, I decided it was better not to write XML-specific handling, and instead to convert the XML to JSON as soon as I got it from the server, “hiding” the complexity behind a conversion parser.

That’s how the XMLParser library was born.

If you want to know more about how it is built and how the conversion is made, there is a README file inside the Git Repository: https://github.com/Zedenem/XMLParser/blob/master/README.md
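To make the idea concrete, here is a minimal sketch of that kind of conversion in Java, using only the JDK’s DOM parser. It is not the XMLParser library’s API (that library is Objective-C, and its README describes how it really works); the class XmlToJson and its convert method are hypothetical. It assumes deliberately simple rules: an element with child elements becomes a JSON object, a leaf element becomes a string, repeated sibling tags become an array, and attributes and mixed content are ignored.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

/** Hypothetical XML-to-JSON sketch; not the XMLParser library's API. */
final class XmlToJson {

    private XmlToJson() {}

    /** Parses an XML string and returns a JSON string keyed by the root element's tag name. */
    static String convert(String xml) {
        try {
            Element root = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)))
                    .getDocumentElement();
            Map<String, Object> wrapper = new LinkedHashMap<>();
            wrapper.put(root.getTagName(), toValue(root));
            return toJson(wrapper);
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse XML", e);
        }
    }

    // Elements with child elements become ordered maps; leaf elements become trimmed text.
    // A tag seen more than once among siblings is collected into a list (a JSON array).
    @SuppressWarnings("unchecked")
    private static Object toValue(Element element) {
        Map<String, Object> object = new LinkedHashMap<>();
        NodeList children = element.getChildNodes();
        for (int i = 0; i < children.getLength(); i++) {
            Node child = children.item(i);
            if (child.getNodeType() != Node.ELEMENT_NODE) {
                continue; // skip whitespace text, comments, etc.
            }
            Element childElement = (Element) child;
            String key = childElement.getTagName();
            Object value = toValue(childElement);
            Object existing = object.get(key);
            if (existing == null) {
                object.put(key, value);
            } else if (existing instanceof List) {
                ((List<Object>) existing).add(value);
            } else {
                List<Object> list = new ArrayList<>();
                list.add(existing);
                list.add(value);
                object.put(key, list);
            }
        }
        return object.isEmpty() ? element.getTextContent().trim() : object;
    }

    // Serializes maps, lists and strings to compact JSON text.
    private static String toJson(Object value) {
        if (value instanceof Map) {
            StringBuilder sb = new StringBuilder("{");
            boolean first = true;
            for (Map.Entry<?, ?> entry : ((Map<?, ?>) value).entrySet()) {
                if (!first) sb.append(',');
                first = false;
                sb.append(quote(entry.getKey().toString())).append(':').append(toJson(entry.getValue()));
            }
            return sb.append('}').toString();
        }
        if (value instanceof List) {
            List<?> list = (List<?>) value;
            StringBuilder sb = new StringBuilder("[");
            for (int i = 0; i < list.size(); i++) {
                if (i > 0) sb.append(',');
                sb.append(toJson(list.get(i)));
            }
            return sb.append(']').toString();
        }
        return quote(value.toString());
    }

    // Escapes a string as a JSON string literal.
    private static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
            }
        }
        return sb.append('"').toString();
    }
}
```

For example, XmlToJson.convert("<user><name>Ann</name><age>30</age></user>") returns {"user":{"name":"Ann","age":"30"}}. Every leaf comes out as a string because XML carries no type information of its own; a real converter has to decide, for instance, whether "30" should become a number.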

Article image used from Blackbird Interactive’s website: http://blackbirdi.com/wp-content/uploads/2010/12/json-xml.jpg

Exposing RESTful Web Services with the Play 2.x Framework and Accessing Them from an iOS App – Part 1/2

Introduction

This article is a two-part, step-by-step tutorial on how to easily expose RESTful web services using the Play 2.x framework and consume them in an iOS app using AFNetworking.

In this first part, I will explain how to expose your app’s data in JSON format via a REST web service, using the Play 2.x framework.

In the second part to come, I will give you some details on how to access the web services through your iOS app.

Step by Step

Create your Play Application

I will assume that you already have a working installation of the Play 2.x framework on your machine. If you don’t, here is a link to the Play Framework documentation, which provides a great Getting Started tutorial: http://www.playframework.com/documentation/2.1.1/Home.

To create your Play application, just run this command in your folder of choice:

$> play new helloWeb

Play will now ask you two questions:

What is the application name? [helloWeb]

>

Which template do you want to use for this new application?

1 - Create a simple Scala application

2 - Create a simple Java application

> 2

Just press Enter to answer the first question and type 2 for the second one to choose the Java template. Play will now say it better than I can:

OK, application helloWeb is created. Have fun!

Write your Web Service method

We are now going to write a simple method that returns a JSON result. Go to your controllers folder (./app/controllers) and open the Application.java file.

Application.java should contain its default definition, which renders your index.scala.html template:

package controllers;

import play.*;
import play.mvc.*;

import views.html.*;

public class Application extends Controller {

    public static Result index() {
        return ok(index.render("Your new application is ready."));
    }

}

We are going to add our method, calling it helloWeb(). Just add the following method below index():

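public static Result helloWeb() {
    // Build a JSON object: {"content": "Hello Web"}
    ObjectNode result = Json.newObject();
    result.put("content", "Hello Web");
    // ok() sends the JSON back with a 200 (OK) status code
    return ok(result);
}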

Here are the steps taken to create this simple method:

- Create a new JSON ObjectNode called result

- Put the String "Hello Web" for the key "content"

- Return result via the ok() method, which sends it with a 200 (OK) HTTP status code

To make it work, we will need to add these two imports:

import org.codehaus.jackson.node.ObjectNode;
import play.libs.Json;

That’s it! We have created our web service method; now all we need to do is expose it!

Expose your Web Service route

Last step: go to your conf folder (./conf) and open the routes file.

The routes file should contain its default definition, which declares two routes: one for the home page and another for all your assets:

# Routes
# This file defines all application routes (Higher priority routes first)
# ~~~~

# Home page
GET     /                   controllers.Application.index()

# Map static resources from the /public folder to the /assets URL path
GET     /assets/*file       controllers.Assets.at(path="/public", file)

All we have to do now is declare our web service’s route, just like this:

# Hello Web (JSON Web Service)
GET     /helloWeb           controllers.Application.helloWeb()

Done! You can access your newly created Web Service at its declared URL path. By default:

http://localhost:9000/helloWeb

It should display:

{

"content":"Hello Web"

}

Discussion

Now that you know how to expose web services, what about code organization? Where would you (or do you) put the web service declarations? In one or several of your existing controllers? In a dedicated one?

I would love to hear other people’s opinions on this, so feel free to leave a comment.

Apple: the art of driving behaviour

At WWDC 2013, Apple started its keynote with a video describing its intentions as a product manufacturer and introducing its new campaign, “Designed by Apple in California”.

What better example could there be of what Simon Sinek explains at TEDxPugetSound about driving behaviour?

“People don’t buy what you do, they buy why you do it.”

In this talk, Simon Sinek explains how great leaders inspire action by reversing the usual chain of communication, which goes from “What we do” to “How we do it” (sometimes with a hint of “Why we do it”), into Why-first communication. He calls these three levels (What, How, Why) the Golden Circle. Sinek takes the example of Apple’s way of selling a computer:

- From the outside in (What – How – Why):

  - “We make great computers.” – What

  - “They’re beautifully designed, simple to use and user friendly.” – How

  - “Wanna buy one?”

- From the inside out (Why – How – What):

  - “Everything we do, we believe in challenging the status quo. We believe in thinking differently.” – Why

  - “The way we challenge the status quo is by making our products beautifully designed, simple to use and user friendly.” – How

  - “We just happen to make great computers.” – What

  - “Wanna buy one?”

Sounds familiar?

The Golden Circle

iOS URL Schemes Parameters: What about standardization?

On Saturday, June 8th, I attended the App.net hackathon in San Francisco (the article is coming soon…) and saw a presentation about x-callback-url, a way to standardize the parameters passed from app to app via iOS URL schemes.

If you want to learn more about this, the specification is here: http://x-callback-url.com/specifications/
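Going by that specification, a request has the shape [scheme]://x-callback-url/[action]?[x-callback parameters]&[action parameters], where the optional x-callback parameters are x-source, x-success, x-error and x-cancel. The Java sketch below only assembles such a URL string with percent-encoded values; the class XCallbackUrl is hypothetical, and it assumes Java 10 or later for URLEncoder.encode(String, Charset). On iOS you would hand the resulting string to UIApplication’s openURL:.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.Map;

/** Hypothetical builder for x-callback-url style URLs. */
final class XCallbackUrl {

    private XCallbackUrl() {}

    /**
     * Returns scheme://x-callback-url/action?[x-callback params]&[action params].
     * Pass empty maps when there are no parameters; use LinkedHashMap to control order.
     */
    static String build(String scheme, String action,
                        Map<String, String> callbackParams,
                        Map<String, String> actionParams) {
        StringBuilder url = new StringBuilder(scheme)
                .append("://x-callback-url/")
                .append(action);
        char separator = '?';
        // x-callback parameters (x-source, x-success, x-error, x-cancel) first, then action parameters
        for (Map<String, String> params : List.of(callbackParams, actionParams)) {
            for (Map.Entry<String, String> entry : params.entrySet()) {
                url.append(separator)
                   .append(encode(entry.getKey()))
                   .append('=')
                   .append(encode(entry.getValue()));
                separator = '&';
            }
        }
        return url.toString();
    }

    // URLEncoder does HTML form encoding (space becomes '+'), so turn '+' into %20 for a URL query.
    // A literal '+' in the input is already encoded as %2B, so only spaces are affected.
    private static String encode(String value) {
        return URLEncoder.encode(value, StandardCharsets.UTF_8).replace("+", "%20");
    }
}
```

For example, calling a hypothetical targetapp with the action note, an x-source of My App and a text parameter of Hello World yields targetapp://x-callback-url/note?x-source=My%20App&text=Hello%20World. Keeping the x-callback parameters in their own map mirrors the split the specification makes between the standard callback parameters and each app’s own action parameters.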

And here is the associated blog, where I learned, among other things, that Tumblr integrates x-callback-url: http://x-callback-url.com/blog/

You can also follow the project via Twitter: @xcallbackurl

I will certainly try to use this next time I am struggling with URL schemes, just to see if the standard is efficient and comprehensible.

What do you think about it? Do you know of other people trying to standardize inter-app communication? Do you, or would you, use it?

WWDC 2013

Apple’s WWDC 2013 takes place from June 10th to 14th at Moscone West, San Francisco.

I will be there enjoying sessions, labs and parties, and meeting fellow developers and entrepreneurs.

And, well, I’m also planning on taking my sweet time…

Stay in touch with me here or on social networks for news (that is, as far as the NDA doesn’t force me to keep my mouth shut…)

Hello world!

Welcome to the new version of Zedenem.com

Here are the new sections you will discover as you click around:

- Blog: Where I will give you quick updates on what I am doing, starting with coverage of Apple’s WWDC 2013

- Apps: Updates on the apps I am working on and a history of what I have done (under construction)

- Articles: Technical articles on various subjects I am hacking on (under construction)

- Hackathons: A special section covering the hackathons I attend and the projects I develop during them (under construction)

More things will be coming in the future, so stay tuned!

Please enjoy and feel free to give me feedback.

Zouhair.